
How Have HCI Evaluation Methods Transformed to Enhance UX?

The progression of HCI evaluation techniques over recent decades reflects the field’s growing complexity and its deepening understanding of user experience (UX). In the late 1980s and early 1990s, discount evaluation methods such as heuristic evaluation and cognitive walkthroughs focused largely on task completion, learnability, and usability problems. As the web and digital systems made richer data collection possible, evaluation moved toward a more holistic understanding of UX. Researchers like Effie Law began quantifying aesthetics, satisfaction, and adoption, uncovering the emotional and subjective facets of experience. The rise of “evaluating in the wild” used log analysis and embedded assessment to understand complex, longitudinal engagement. Evaluation also took a participatory turn, engaging users as co-creators rather than only as subjects; techniques like cooperative inquiry, cultural probes, and data-enabled storytelling opened new collaborative opportunities. Today, HCI evaluation retains its foundational metrics but combines them with a broader view of experience, in which usability, emotion, aesthetics, and meaning come together differently for each user. This continuing evolution of methods moves the field toward a deeper, more multidimensional understanding of the relationship between people and technology.

Designing for Usability: Key Principles and What Designers Think

John D. Gould, Clayton Lewis · 01/03/1985

The paper "Designing for Usability: Key Principles and What Designers Think" by John D. Gould and Clayton Lewis is a notable contribution to the field of User Experience (UX) Design, particularly focusing on usability principles. It emphasizes the importance of usability in the design process and how it can significantly impact the effectiveness and efficiency of a product.

- Usability in Design: The paper underscores the centrality of usability in the design process. It argues that for a product to be successful, it must not only be functional but also user-friendly and easy to navigate. This approach marks a shift from focusing solely on the technical aspects of design to considering the user's experience and interaction with the product.

- Key Principles of Usability: Gould and Lewis outline three key principles that should guide the design process: an early focus on users and tasks, empirical measurement, and iterative design. The paper argues that designers should study the intended users from the outset, test prototypes with them, and revise the design in response to what the tests reveal.

- Designer Perspectives: The authors explore the views and approaches of designers towards usability. They highlight the gap that often exists between designers' intentions and users' experiences. By understanding how designers think about usability, the paper suggests ways to bridge this gap and create more effective designs.

- Practical Implications and Challenges: The paper discusses the practical implications of these usability principles in the design process. It also addresses the challenges designers face in implementing these principles, such as balancing aesthetic appeal with functionality and the constraints of technical feasibility.

Impact and Reflections: This work has significantly influenced the field of UX design, advocating for a paradigm where usability is a fundamental consideration. However, the paper also recognizes that the implementation of these principles can be complex, requiring designers to continuously adapt and evolve their strategies in response to user feedback and technological advancements.


Designing the User Interface: Strategies for Effective Human-Computer Interaction

Ben Shneiderman, Catherine Plaisant · 01/03/1988

Shneiderman's seminal book provides fundamental methods and principles for designing user interfaces. It has significantly contributed to the field of Human-Computer Interaction (HCI) by establishing widely adopted design guidelines.

- Eight Golden Rules of Interface Design: These rules include striving for consistency, offering informative feedback, providing simple error handling, permitting easy reversal of actions, and reducing short-term memory load. They are fundamental guidelines that support efficient, comfortable user interfaces.

- Direct Manipulation Concept: Shneiderman introduces this principle, explaining that usability is improved when users feel they are directly interacting with objects on the screen, enhancing user control and reducing cognitive load.

- Universal Usability: Advocating for inclusivity, the book presents vital methods for making systems accessible to a diverse user base, emphasizing it as a core responsibility of UI designers.

- Evaluation Methods: Shneiderman presents evaluation techniques such as usability testing and heuristic evaluation, advocating their incorporation into design processes to improve user interface quality.

Impact and Limitations: Shneiderman's work was instrumental in shaping the fields of HCI and UI design, giving designers the means to build efficient, inclusive, and accessible interfaces. However, the book doesn't thoroughly address emerging technologies like AR/VR. Further research applying these principles to such interfaces could be beneficial.


Cognitive Work Analysis: Toward Safe, Productive, and Healthy Computer-Based Work

Kim J. Vicente · 01/04/1999

This seminal book presents a novel framework for human-computer interaction (HCI) called Cognitive Work Analysis (CWA). CWA prioritizes safety, efficiency, and wellness in computer-based work environments.

- Cognitive Work Analysis (CWA): This analytical framework focuses on understanding how people interact with technologies and suggests ways to improve efficiency, safety, and health in computerized workplaces.

- Ecological Interface Design (EID): As a product of CWA, EID advocates for user interface design that complements and supports human cognitive abilities, enabling more effective interaction with technology.

- Safety Culture: The book highlights the importance of fostering a safety culture within organizations, suggesting that CWA can be instrumental in this endeavor as it foregrounds human-technology interactions.

- Human-centered Design: Vicente argues for a human-centered approach to designing technology, a principle that has shaped the field of HCI.

Impact and Limitations: The book has done much to emphasize the need for user-centered design approaches in HCI. However, it glosses over the diversity of users' cognitive abilities. Future research should focus on inclusivity in cognitive user models to cater to a broader spectrum of users.


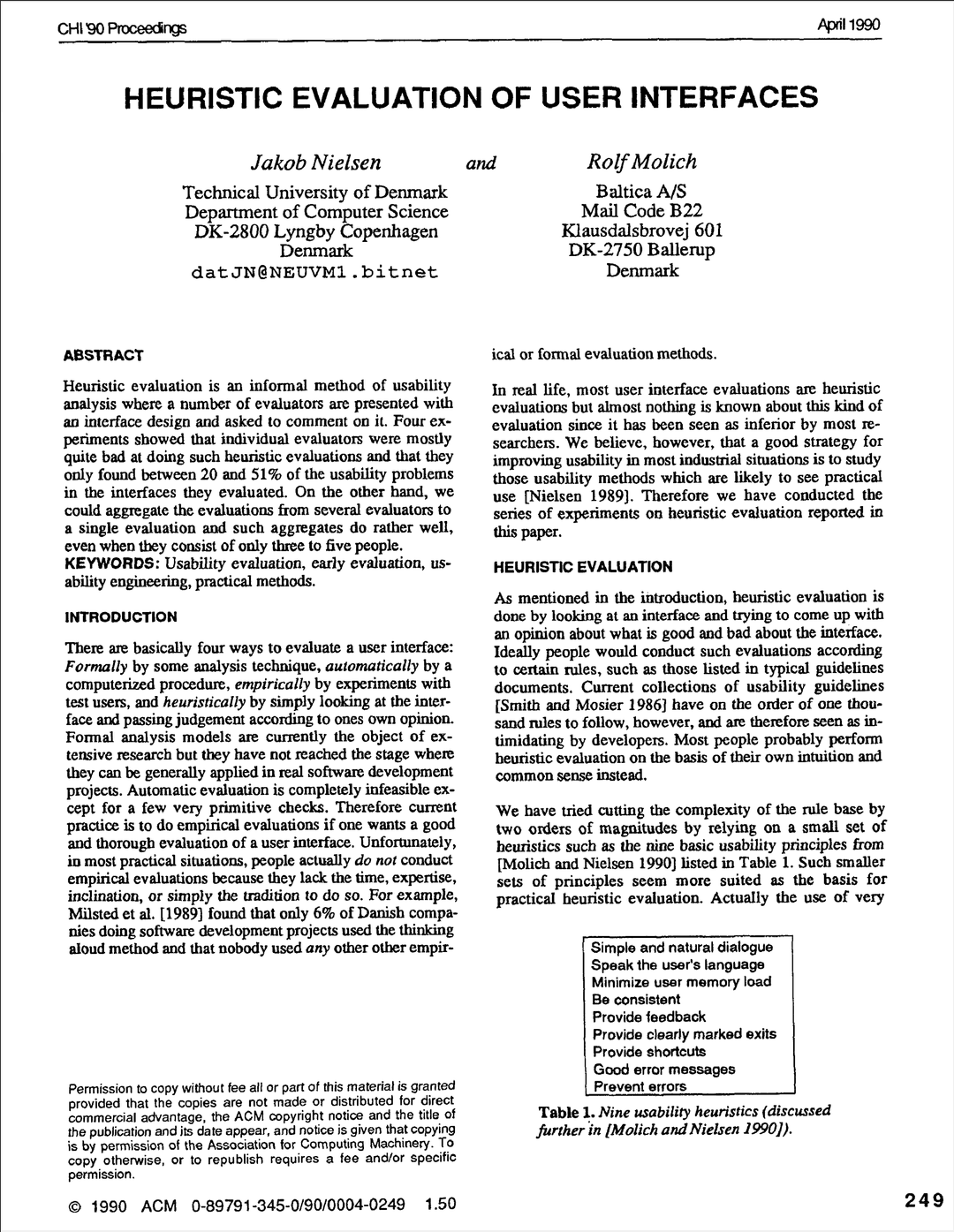

Heuristic Evaluation of User Interfaces

Jakob Nielsen, Rolf Molich · 01/04/1990

The 1990 paper by Nielsen and Molich introduced "Heuristic Evaluation," a usability inspection method in which evaluators examine an interface to quickly identify usability problems. Situated within the growing focus on user experience, this paper provided a cost-effective alternative to user testing, solidifying its place as a fundamental HCI evaluation technique.

- Usability Heuristics: Nielsen and Molich proposed a small set of general principles, or 'heuristics,' for interface design, such as providing feedback, being consistent, and preventing errors. Nielsen's later refinement of the set, with heuristics like "Visibility of system status" and "User control and freedom," became a touchstone for evaluating an interface's usability.

- Expert Evaluation: The paper stressed that usability experts can apply these heuristics to identify most usability issues effectively and efficiently, reducing the reliance on extensive user testing.

- Cost-Effectiveness: The authors argued that heuristic evaluation is less resource-intensive than traditional usability testing, making it accessible for smaller projects and iterative design processes.

- Iterative Design: Nielsen and Molich endorsed the value of performing heuristic evaluations at multiple stages of design, facilitating ongoing refinement based on user needs.

Impact and Limitations: The paper's introduction of heuristic evaluation has had a lasting impact, offering a structured approach for assessing user interfaces. However, its limitations include potential oversight of user-specific issues and a reliance on expert judgment, leaving room for subjectivity and bias.


Cognitive Walkthroughs: A Method for Theory-Based Evaluation of User Interfaces

Peter G. Polson, Clayton Lewis, John Rieman, Cathleen Wharton · 01/11/1992

This paper introduces a new method to HCI called the Cognitive Walkthrough, which aims to evaluate the design and usability of user interfaces. It represents a breakthrough in evaluating interfaces in a practical, theory-driven way.

- Cognitive Walkthroughs: The method revolves around defined tasks, allowing evaluators to predict the difficulties users are likely to face when learning a new system and so to design more efficient interfaces.

- Theory-Based Assessment: The procedure integrates theoretical insights into the evaluation process, making interface assessment more scientifically reliable.

- Interface Design Improvement: Helps designers create more user-friendly, intuitive systems by predicting and tackling usability problems during the design phase.

- Efficiency versus Effectiveness: Highlights the importance of balancing task efficiency and design effectiveness, demonstrating that solutions prioritizing efficiency may sometimes compromise interface intuitiveness.

Impact and Limitations: The cognitive walkthrough method has given designers and researchers new perspectives for predicting and addressing interface design challenges, helping to make technology more user-friendly. However, it has limited application when evaluating complex systems or socio-technical interactions, and more data is required to establish its robustness. Future research can extend the models used in this method to make it more versatile and context-specific.


A Mathematical Model of the Finding of Usability Problems

Jakob Nielsen, Thomas K. Landauer · 01/04/1993

The 1993 paper by Jakob Nielsen and Thomas K. Landauer presents a mathematical model for predicting the number of usability problems discovered in interface evaluation. Positioned at the intersection of HCI and statistics, the paper adds quantitative rigor to the often qualitative domain of usability testing.

- Usability Problem Discovery: The paper introduces a mathematical model, based on Poisson processes, to predict the probability of discovering usability problems during testing, thereby providing a scientific basis for usability evaluation.

- Resource Allocation: By utilizing the model, practitioners can make informed decisions about the allocation of resources in usability studies, such as the number of testers required to uncover a certain percentage of problems.

- Generalizability: The model is fitted to data from both user tests and heuristic evaluations, which suggests that it applies across evaluation methods and projects.

- Efficiency Metrics: The paper also discusses how the model can help in assessing the cost-effectiveness of different usability testing methods, a critical factor in practical application.

Impact and Limitations: The paper has had a transformative effect on how usability studies are designed and interpreted, adding a layer of quantitative analysis to a primarily qualitative field. However, the model is based on the assumption that each tester is equally likely to find any given issue, an assumption that may not hold in more complex, specialized systems.
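
The discovery curve usually associated with this model can be sketched in a few lines. The minimal Python example below assumes the commonly cited form Found(i) = N(1 - (1 - λ)^i), where N is the total number of problems, i is the number of evaluators or test users, and λ is the probability that any one of them finds a given problem. The function names and the value λ = 0.31 are illustrative assumptions, not figures taken from the summary above.

```python
def proportion_found(evaluators: int, lam: float) -> float:
    """Expected share of all problems found by `evaluators` independent evaluators.

    Assumes every evaluator finds any given problem with the same
    probability `lam` (the simplifying assumption noted above).
    """
    if evaluators < 0 or not 0.0 <= lam <= 1.0:
        raise ValueError("evaluators must be >= 0 and lam must lie in [0, 1]")
    return 1.0 - (1.0 - lam) ** evaluators


def evaluators_needed(target: float, lam: float) -> int:
    """Smallest number of evaluators whose expected coverage reaches `target`."""
    if not 0.0 < target < 1.0 or not 0.0 < lam <= 1.0:
        raise ValueError("target must lie in (0, 1) and lam in (0, 1]")
    count = 0
    while proportion_found(count, lam) < target:
        count += 1
    return count


# Illustrative detection probability (an assumption for this sketch).
ILLUSTRATIVE_LAMBDA = 0.31

for n in range(1, 7):
    share = proportion_found(n, ILLUSTRATIVE_LAMBDA)
    print(f"{n} evaluator(s): {share:.1%} of problems expected")
```

Because each additional evaluator mostly rediscovers problems that are already known, the curve flattens quickly, which is the basis for the resource-allocation argument. If λ is lower for a complex or specialized system, the same functions show how many more evaluators would be needed.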


Usability Inspection Methods

Jakob Nielsen · 01/04/1994

Jakob Nielsen's 1994 paper "Usability Inspection Methods" discusses usability inspection methods, an integral part of HCI evaluation. The paper's chief contribution is to survey these methods and relate their use to the success of the resulting system.

- Cognitive Walkthroughs: Nielsen describes this method, in which experts step through tasks from a user's perspective. It focuses on understanding the user's thought process and problem-solving approach.

- Heuristic Evaluation: A user-focused strategy in which evaluators check a design against a set of usability principles to identify usability issues. Nielsen’s ten usability heuristics are still widely applied in modern user-centred design.

- Think Aloud Protocol: In this method, users verbalize their thoughts while performing tasks. This helps evaluators understand users' reasoning and decision-making during task performance.

- Pluralistic Walkthroughs: With diverse groups of users, this method allows understanding varied perceptions and issues, helping create a more comprehensive design.

Impact and Limitations: The inspection methods Nielsen describes continue to shape HCI research and practice. For example, heuristic evaluation guides user-interface design. However, the work's primary limitation is that it may not cover all real-world settings or users. Additional research should focus on methods that cater to a broader set of user personas and contexts.


Research through Design as a Method for Interaction Design Research in HCI

John Zimmerman, Jody Forlizzi, Shelley Evenson · 01/01/2007

"Research through Design as a Method for Interaction Design Research in HCI" by Zimmerman, Forlizzi, and Evenson addresses the gap between design and research within the HCI community. It introduces a new model termed "Research through Design," aimed at integrating design strengths into HCI research. This model allows for the creation of innovative solutions that transform the world from its current state to a preferred state, essentially "making the right thing."

- Research through Design (RtD): The paper posits RtD as a novel approach that leverages designers' unique ability to tackle under-constrained problems. This offers a mechanism for integrating design-based problem-solving skills into HCI research.

- Four Lenses: To evaluate the impact and contribution of RtD, the authors introduce a set of four lenses: process, invention, relevance, and extensibility. These lenses serve as criteria to critically assess the quality and implications of design-based HCI research.

- Under-Constrained Problems: RtD is especially potent in addressing what the authors term "under-constrained problems," which lack clear parameters and solutions. This focus enables designers to contribute their expertise to a broader range of research challenges.

- Case Examples: The paper offers three case examples to illustrate the utility of this method, serving as a template for how designers can generate research insights from their work.

Impact and Limitations: This paper substantially influences the HCI research community by providing a structured framework that recognizes the value of design thinking. The "Research through Design" model legitimizes design as a research methodology in HCI, offering a symbiotic relationship between design and research. However, the model's effectiveness still requires extensive empirical validation to firmly establish its credibility and utility within the community.


Measuring the User Experience on a Large Scale: User-Centered Metrics for Web Applications

Kerry Rodden, Hilary Hutchinson, Xin Fu · 01/04/2010

This paper addresses the challenge of quantitatively evaluating user experience (UX) at a large scale, focusing on web applications. The authors propose the HEART framework (Happiness, Engagement, Adoption, Retention, and Task success) for developing user-centered metrics, together with a Goals-Signals-Metrics process for choosing them.

- User-centered metrics: These are metrics designed to capture the quality of the user's experience, covering both attitudes and behavior, rather than only business or technical performance. They give practitioners a mechanism for evaluating UX on a quantitative scale.

- UX quantification: The paper introduces methods for quantifying a user's experience. This allows businesses and developers to track user satisfaction and identify areas for improvement.

- Framework adaptation: The authors suggest this framework can be adapted to different web applications, giving it a wide application range for improving UX understanding and management.

- Benchmarking: The paper emphasizes the role of regular benchmarking in evaluating and improving UX over time.

Impact and Limitations: Their work has shaped how product teams quantify and track UX at scale. However, quantifying a user's subjective experience has inherent challenges and biases. Further studies could explore methods to reduce these biases and improve the framework’s reliability.
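
To make the idea of a log-derived, user-centered metric concrete, the sketch below computes week-over-week retention from usage logs, one kind of behavioral signal that can be benchmarked over time. It is a minimal Python illustration, not the authors' implementation: the event format, the function names, and the sample data are all hypothetical.

```python
from collections import defaultdict


def weekly_active_users(events):
    """Group (user_id, week_number) log events into a set of active users per week."""
    active = defaultdict(set)
    for user_id, week in events:
        active[week].add(user_id)
    return dict(active)


def retention_rate(active, week):
    """Share of users active in `week` who are also active in `week + 1`."""
    current = active.get(week, set())
    if not current:
        raise ValueError(f"no active users recorded for week {week}")
    following = active.get(week + 1, set())
    return len(current & following) / len(current)


# Hypothetical log: each tuple is (user_id, week in which the user was active).
events = [
    ("a", 1), ("b", 1), ("c", 1), ("d", 1),
    ("a", 2), ("b", 2), ("e", 2),
    ("a", 3),
]

active = weekly_active_users(events)
for week in (1, 2):
    print(f"week {week} -> week {week + 1}: {retention_rate(active, week):.0%} retained")
```

Tracking a figure like this release after release is one way to benchmark; in practice it would be read alongside attitudinal measures such as satisfaction surveys, since behavior alone does not explain why users stay or leave.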


Measuring the User Experience

Tom Tullis, Bill Albert · 01/05/2013

"Measuring the User Experience" by Tom Tullis and Bill Albert is a pivotal book in the HCI field that aims to bridge the gap between qualitative and quantitative analysis of user experiences. It provides practitioners with methods for rigorous, data-driven evaluation of usability and user engagement.

- Quantitative Metrics: The book introduces key performance indicators like task success rate, error rate, and time-on-task, enabling designers to quantify user experience. This is crucial for data-driven decision-making processes in interaction design.

- Qualitative Insights: While emphasizing the quantitative, the book also discusses how qualitative methods can supplement data, providing a holistic view of user experience. It advises on integrating methods like interviews and surveys for nuanced perspectives.

- Test Design: It offers guidelines for effective user testing setups, including test scripts and scenarios, facilitating a more structured approach to usability testing and maximizing the value of each test session.

- Statistical Analysis: The book demystifies the statistical techniques essential for analyzing usability data, making it accessible to those who may not have a background in statistics but still need to interpret data.

Impact and Limitations: The comprehensive approach to UX metrics has made this a go-to resource for both academics and industry professionals. However, while it covers the how-to of metrics, there's less emphasis on the 'why', which could lead to potential misuse of metrics without understanding their contextual relevance. Future work could focus on deeper integration of metrics with specific user contexts or technological paradigms.
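
The performance metrics named above reduce to simple arithmetic over test-session records. The following minimal Python sketch shows one way to compute them; the record layout, the function names, the choice to average time only over successful attempts, and the sample data are assumptions made for illustration, not definitions taken from the book.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    participant: str
    completed: bool   # did the participant finish the task successfully?
    seconds: float    # time spent on the task
    errors: int       # number of errors observed during the attempt


def task_success_rate(attempts):
    """Fraction of attempts that ended in successful completion."""
    if not attempts:
        raise ValueError("no attempts recorded")
    return sum(a.completed for a in attempts) / len(attempts)


def mean_time_on_task(attempts):
    """Mean time in seconds, averaged over successful attempts only."""
    times = [a.seconds for a in attempts if a.completed]
    if not times:
        raise ValueError("no successful attempts recorded")
    return mean(times)


def errors_per_attempt(attempts):
    """Mean number of errors per attempt, one possible reading of 'error rate'."""
    if not attempts:
        raise ValueError("no attempts recorded")
    return sum(a.errors for a in attempts) / len(attempts)


# Hypothetical results for one task from a four-participant test.
attempts = [
    TaskAttempt("P1", True, 42.0, 0),
    TaskAttempt("P2", True, 58.0, 1),
    TaskAttempt("P3", False, 120.0, 3),
    TaskAttempt("P4", True, 50.0, 0),
]

print(f"success rate: {task_success_rate(attempts):.0%}")
print(f"mean time on task: {mean_time_on_task(attempts):.1f} s")
print(f"errors per attempt: {errors_per_attempt(attempts):.2f}")
```

With samples this small, point estimates are noisy, so results like these are best reported with confidence intervals and read alongside the qualitative observations from the same sessions.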
